Comprehensive Data Archive Network

A video showcasing the Wolfram Language has been doing the rounds. It makes for an impressive demo, but not nearly impressive enough for me to commit to a proprietary programming language that only runs on a single vendor’s platform. Nonetheless, there are a lot of interesting features in there.

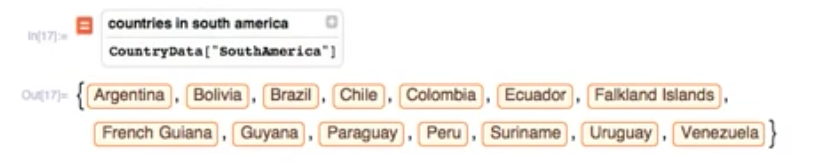

A major one is the integration of curated data sets of real-world knowledge, meaning that, unlike most programming environments, the Wolfram Language comes with some knowledge of the world built in. The video includes an excellent demo of this, starting with a list of South American countries.

Wolfram then goes on to look up the flags of these countries, pull out the dominant colours, and so on. While these are all things that could be done in almost any language, you’d have to find the country list from somewhere, massage it into a useful format, and import it into your code. Having this kind of general data there at your fingertips removes a point of friction for many tasks, and seeing the demo got me thinking.

One of the big practical advances in programming that has occurred since I’ve been doing it seriously is the rise of the package manager. Starting with the venerable CPAN for Perl, almost every language worth its salt has a more-or-less well developed package system - RubyGems for Ruby, pip for Python, npm for Node.js, and so on. This made me wonder: why isn’t there such a thing for data?

Off the top of my head, what I’m after would have the following characteristics:

- Language-independent. If I need to tie an R list of countries to a Python list of flags, we’re pretty much back to square one.

- Standardised format. XML would be an obvious choice, as would JSON. The actual details would be hidden behind language-specific implementations - as a programmer, you’d just see objects.

- Distributed. You should be able to pick and choose data sources, in the same way you can choose APT repositories in Debian. There would be a few well-known, trusted sources to get you started, but you could add in more specialised collections.

- You should be able to refer to data by natural, symbolic names (e.g. “Countries”, “Mountains”) or specific URIs (“http://example.com/geography/countries/v1.1”). Handling this sensibly in the context of the previous point is probably the hardest aspect.

- Data should be cached and versioned, and there should be some kind of simple scheme for combining data sets into aggregates (pairing countries with their capitals, say).
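To make the naming and distribution points a little more concrete, here is a minimal sketch of how a symbolic name might be resolved against an ordered list of sources. Everything in it is hypothetical: the `Source` and `Resolver` classes, the catalogue layout, and the `example.org` repository are assumptions made purely for illustration, and real sources would be fetched over the network rather than held in memory.

```python
from dataclasses import dataclass, field


@dataclass
class Source:
    """A hypothetical data repository mapping symbolic names to versions."""
    base_uri: str
    catalogue: dict = field(default_factory=dict)  # name -> list of "x.y" versions

    def lookup(self, name):
        """Return the URI of the newest version of `name`, or None if absent."""
        versions = self.catalogue.get(name)
        if not versions:
            return None
        # Compare versions numerically so that "1.10" sorts after "1.9".
        latest = max(versions, key=lambda v: tuple(int(p) for p in v.split(".")))
        return f"{self.base_uri}/{name.lower()}/v{latest}"


class Resolver:
    """Resolve names against sources in priority order, caching the answers."""

    def __init__(self, sources):
        self.sources = list(sources)
        self._cache = {}

    def resolve(self, ref):
        # A full URI is already unambiguous, so pass it straight through.
        if ref.startswith(("http://", "https://")):
            return ref
        if ref not in self._cache:
            for source in self.sources:
                uri = source.lookup(ref)
                if uri is not None:
                    self._cache[ref] = uri
                    break
            else:
                raise KeyError(f"no configured source provides {ref!r}")
        return self._cache[ref]


# Earlier sources win, much like repository priority in a package manager.
trusted = Source("http://example.com/geography", {"Countries": ["1.0", "1.1"]})
extra = Source("http://example.org/alpine", {"Mountains": ["0.3"], "Countries": ["9.0"]})
resolver = Resolver([trusted, extra])

print(resolver.resolve("Countries"))  # http://example.com/geography/countries/v1.1
print(resolver.resolve("Mountains"))  # http://example.org/alpine/mountains/v0.3
```

Resolving by source order is only one possible policy. It sidesteps the question of what to do when two sources disagree about what “Countries” means, which is exactly the hard part.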

With all this in place, I could just fire up my Python (or Ruby or Node) shell and do the following:

```python
>>> import cdan
>>> cdan.get("Countries", "Capitals")
[("Afghanistan", "Kabul"), ...]
```
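As a rough idea of what such a `get` could do behind the scenes, here is a small sketch that joins data sets on a shared key. The in-memory `DATASETS` table and the use of ISO country codes as the join key are assumptions for the sake of the example; a real implementation would presumably pull the data from a cache or a remote source.

```python
# Hypothetical stand-in for fetched, cached data sets.
# Each data set maps a shared key (an ISO country code here) to a value.
DATASETS = {
    "Countries": {"AF": "Afghanistan", "AL": "Albania"},
    "Capitals": {"AF": "Kabul", "AL": "Tirana"},
}


def get(*names):
    """Join the named data sets on their shared keys, returning a list of tuples."""
    tables = [DATASETS[name] for name in names]
    # Keep only the keys present in every requested data set.
    shared = set(tables[0]).intersection(*tables[1:])
    return [tuple(table[key] for table in tables) for key in sorted(shared)]


print(get("Countries", "Capitals"))
# [('Afghanistan', 'Kabul'), ('Albania', 'Tirana')]
```

The join only works because both data sets agree on a key, so any real scheme would need some convention for shared identifiers.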

These are just rough ideas, with a lot of hand-waving, but I think there’s something there. Moreover, I’d be amazed if I were the first person to think of this. Far more likely is that something similar already exists and I’ve just not come across it.

That’s where you come in, dear reader. If you know of something like this that I’ve missed, please let me know via Twitter or mail, and I’ll update this post with any interesting links.

Update: Thanks to @semapher for pointing me at the Open Knowledge Foundation. In particular, their CKAN project looks a step in the right direction, but it’s not quite in the form described above. Worth keeping an eye on, though.